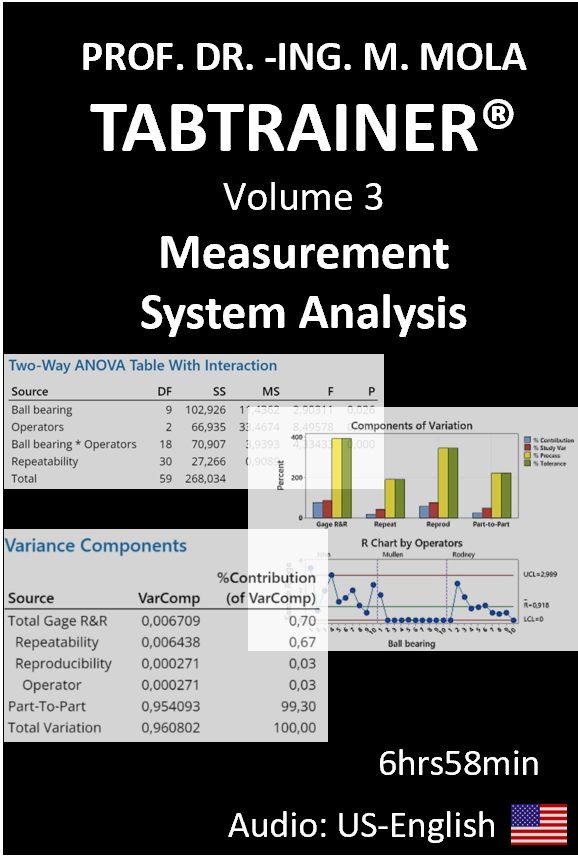

Minitab® Tutorial - TABTRAINER® VOLUME 3: MEASUREMENT SYSTEM ANALYSIS €99,99

- 18 GAGE R&R STUDY CROSSED, PART 1

- 18 GAGE R&R STUDY CROSSED, PART 2

- 18 GAGE R&R STUDY CROSSED, PART 3

- 19 CROSSED MSA STUDY, NON-VALID

- 20 NESTED MSA GAGE R&R STUDY, PART 1

- 20 NESTED MSA GAGE R&R STUDY, PART 2

- 21 MEASUREMENT SYSTEM ANALYSIS: STABILITY AND LINEARITY

- 22 ATTRIBUTIVE AGREEMENT ANALYSIS (GOOD PART, BAD PART), PART 1

- 22 ATTRIBUTIVE AGREEMENT ANALYSIS (GOOD PART, BAD PART), PART 2

- 22 ATTRIBUTIVE AGREEMENT ANALYSIS (GOOD PART, BAD PART), PART 3

- 23 ATTRIBUTIVE AGREEMENT ANALYSIS (MORE THAN 2 ATTRIBUTE LEVELS), PART 1

- 23 ATTRIBUTIVE AGREEMENT ANALYSIS (MORE THAN 2 ATTRIBUTE LEVELS), PART 2

- 23 ATTRIBUTIVE AGREEMENT ANALYSIS (MORE THAN 2 ATTRIBUTE LEVELS), PART 3

18 GAGE R&R STUDY CROSSED, PART 1

18 GAGE R&R STUDY CROSSED

In the 18th Minitab tutorial, we visit the final assembly of Smartboard Company and accompany the quality team as they carry out a measurement system analysis in the form of a crossed Gage R&R study. In the first part of this multi-part Minitab tutorial, we familiarize ourselves with the fundamentals and the most important definitions, such as measurement accuracy, repeatability, and reproducibility, as well as linearity, stability, and resolution. Equipped with these fundamentals, we move on to the practical implementation of the crossed measurement system analysis in the second part, and take the opportunity to learn the difference between crossed and nested measurement system analysis. We will learn that there are basically two mathematical approaches to performing a crossed measurement system analysis: the ARM method and the ANOVA method. To better understand both methods, we first carry out our crossed measurement system analysis using the ARM method in the second part of this training unit and then, for comparison, repeat it using the ANOVA method on the same data set in the third part. We will calculate both methods manually, step by step, and derive the corresponding measurement system parameters in order to understand how the results in the Minitab output window are generated.

MAIN TOPICS MINITAB TUTORIAL 18, part 1

- National and international MSA standards in comparison

- Process variation versus measurement system variation

- Measurement accuracy

- Repeatability versus Reproducibility

- Linearity, Stability, Bias

- Number of distinct categories, tolerance resolution

MAIN TOPICS MINITAB TUTORIAL 18, part 2

- ARM analysis approach as part of a crossed measurement system analysis

- Difference between crossed, nested and expanded MSA

- Manual derivation of all variation components according to the ARM method

- Manual derivation of the tolerance resolution based on the ndc parameter

- Operator-related R-chart analysis

- Manual derivation of the control limits in the R-chart

- Operator-related Xbar-chart analysis

- Manual derivation of the control limits in the Xbar-chart

- Individual value diagram for analyzing the data scatter

- Operator-dependent boxplot analysis

- Interpretation of the Gage R&R Report

- Set ID variables in the Gage R&R Report

MAIN TOPICS MINITAB TUTORIAL 18, part 3

- ANOVA analysis approach as part of MSA crossed

- 2-way ANOVA table with interactions

- Manual derivation of all variation components according to the ANOVA method

- Manual calculation of the ndc-parameter

- Hypothesis test within the framework of the ANOVA method

- Hypothesis test regarding interaction effects
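The ndc derivation named in the topic lists above can be sketched in a few lines of Python. This is only a minimal illustration under stated assumptions: the function name `gage_rr_summary` and the variance values are hypothetical, the factor √2 (about 1.41) follows the common AIAG convention, and the result is truncated to a whole number, which may not match every software setting.

```python
import math


def gage_rr_summary(var_repeat, var_reprod, var_part):
    """Summarize a crossed Gage R&R study from its variance components.

    All three inputs are variances (squared standard deviations).
    """
    var_grr = var_repeat + var_reprod      # total measurement system variance
    var_total = var_grr + var_part         # total observed variance
    sd_grr = math.sqrt(var_grr)
    sd_part = math.sqrt(var_part)
    sd_total = math.sqrt(var_total)
    return {
        # share of the total variance caused by the measurement system
        "pct_contribution_grr": 100.0 * var_grr / var_total,
        # share of the total standard deviation (study variation)
        "pct_study_var_grr": 100.0 * sd_grr / sd_total,
        # number of distinct categories, truncated (assumed convention)
        "ndc": math.floor(math.sqrt(2.0) * sd_part / sd_grr),
    }


# Hypothetical variance components, chosen only for illustration.
summary = gage_rr_summary(var_repeat=0.04, var_reprod=0.05, var_part=0.91)
print(summary)
```

With these made-up inputs, the measurement system accounts for roughly 30 % of the study variation and the sketch reports four distinct categories.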

19 CROSSED MSA STUDY, NON-VALID

In the 19th Minitab tutorial, we are back in the final assembly of Smartboard Company. As in the previous training unit, a crossed measurement system analysis is carried out, but this time we get to know a measurement system that must be classified as unacceptable according to the standard specifications. The core objective of this Minitab tutorial is to work out an appropriate procedure for identifying the causes of the inadequate measurement system on the basis of the poor quality criteria. In this context, we will also get to know other functions that are useful in day-to-day business, such as creating the Gage R&R study worksheet layout for the actual data acquisition, or the very useful "gage run chart", which is not generated by default as part of a measurement system analysis. We will see that we can generate this gage run chart very easily and thus obtain additional information from which to derive specific improvement measures for the non-valid measurement system.

MAIN TOPICS MINITAB TUTORIAL 19

- Crossed Gage R&R measurement system analysis

- Create Gage R&R study worksheet layout

- Measurement system variation in relation to the customer specification limits

- Manual derivation of the control limits in the R-Chart

- Analysis of the interaction effects by using the interaction plot

- Analysis of error classifications

- R-Chart and Xbar-Chart as part of the measurement system analysis

- Single value plot as part of the measurement system analysis

- Boxplots as part of the measurement system analysis

- Working with identification variables and marking palette

- Interaction plot between test object and operators

- Working with the Gage run chart
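The manual derivation of R-chart and Xbar-chart control limits mentioned in the list above can be sketched as follows. This is a simplified illustration, not the course's worked example: the function name `chart_limits` and the measurement values are hypothetical, each subgroup is assumed to hold the repeat measurements of one part by one operator, and only the standard Shewhart constants for subgroup sizes 2 and 3 are included.

```python
# Standard Shewhart control chart constants, keyed by subgroup size
# (here: number of repeat measurements per part and operator).
CONSTANTS = {
    2: {"A2": 1.880, "D3": 0.0, "D4": 3.267},
    3: {"A2": 1.023, "D3": 0.0, "D4": 2.574},
}


def chart_limits(subgroups):
    """Return (LCL, center line, UCL) for the R-chart and the Xbar-chart."""
    n = len(subgroups[0])
    if any(len(s) != n for s in subgroups):
        raise ValueError("all subgroups must have the same size")
    c = CONSTANTS[n]
    ranges = [max(s) - min(s) for s in subgroups]
    means = [sum(s) / n for s in subgroups]
    r_bar = sum(ranges) / len(ranges)        # average range
    x_bar_bar = sum(means) / len(means)      # grand mean
    return {
        "r_chart": (c["D3"] * r_bar, r_bar, c["D4"] * r_bar),
        "xbar_chart": (
            x_bar_bar - c["A2"] * r_bar,
            x_bar_bar,
            x_bar_bar + c["A2"] * r_bar,
        ),
    }


# Hypothetical data: three parts, each measured twice by one operator.
limits = chart_limits([[10.0, 10.2], [9.8, 9.9], [10.1, 10.4]])
print(limits)
```

In a gage study, a range above the upper R-chart limit points to an operator who measures inconsistently, while Xbar points outside the narrow Xbar limits are desirable because they show that the parts can be told apart.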

20 NESTED MSA GAGE R&R STUDY, PART 1

20 NESTED MSA GAGE R&R STUDY

In the 20th Minitab tutorial, we are in the axle test bench laboratory of Smartboard Company. This is where the dynamic load properties of the manufactured skateboard axles are examined. On the axle test bench, the skateboard axles are subjected to a dynamically increasing oscillating stress until the load limit is reached and the axle breaks. For us this means that we are dealing with destructive material testing this time: a test part cannot be measured more than once, so repeatability and reproducibility cannot be determined from repeated measurements of the same part. In this Minitab tutorial, we will therefore get to know the nested Gage R&R study as the method of choice and learn which conditions must be met as a basic prerequisite for a nested measurement system analysis to work. In this context, we will apply the industry-proven 40:4 rule to ensure a sufficient sample size for a nested measurement system analysis. Using appropriate hypothesis tests as part of the nested measurement system analysis, we will evaluate whether the testers or the production batches have a significant influence on the variation of our measurement results. We will also get to know the important ndc parameter as a quality measure for the resolution of our measurement system, and learn how to use this key parameter to assess whether the resolution of our measurement system is sufficiently high, in terms of the applicable standard, for use in process optimization. Using the corresponding variance components, we will then work out whether the measurement system variation makes up an impermissibly high proportion of the total variation and therefore exceeds the limit permitted by the standard specification.

We will examine the variation of the individual testers to determine whether, and if so which, tester contributes most to the measurement system variation. In this context, we will use graphical representations such as quality control charts and box plots to visually identify anomalies in the variation. Based on the identified causes, we will finally be able to derive reliable recommendations for improving the measurement system, and we will carry out a new measurement system analysis after the recommended improvement measures have been implemented. The results of the improved measurement system are then compared with those of the original measurement system.

MAIN TOPICS MINITAB TUTORIAL 20, part 1

- Nested measurement system analysis

- Boundary conditions for a nested measurement system analysis

- Principle of homogeneity in the context of a nested measurement system analysis

- Repeatability as part of a nested measurement system analysis

- Reproducibility as part of a nested measurement system analysis

- Interpretation of the Gage R&R nested report

MAIN TOPICS MINITAB TUTORIAL 20, part 2

- Weak point analysis of an invalid measuring system

- Variance components

- R-Chart and Xbar chart

- Single value plot

- Boxplot and scatter plot

- Carrying out a second nested measurement system analysis

21 MEASUREMENT SYSTEM ANALYSIS: STABILITY AND LINEARITY

In the 21st Minitab tutorial, we accompany the ultrasonic testing laboratory of Smartboard Company. In this department, the manufactured skateboard axles are subjected to ultrasonic testing to ensure that no undesirable cavities have formed in the axle material during production. In materials science, cavities are microscopically small, material-free areas which, above a certain size, can weaken the material and thus lead to premature axle breakage even under slight stress. The ultrasonic testing used by Smartboard Company is one of the classic non-destructive acoustic testing methods in materials testing, and is based on the principle that sound waves are reflected to different degrees in different material environments. Depending on the size of the cavity in the axle material, the sound waves are reflected back to the ultrasonic probe to varying degrees. The size and position of the cavity, in micrometers, are calculated from the time it takes for the emitted ultrasonic pulse to be reflected back to the probe. The focus of this Minitab tutorial is to evaluate the ultrasonic testing device with regard to the measurement system criteria of linearity and stability, in order to detect any systematic measurement deviations. Specifically, we will learn how the stability and linearity parameters can be used to find out how accurately the ultrasonic device measures over the entire measuring range. For this purpose, the ultrasonic testing team randomly selects ten representative skateboard axles, following the recommendations of the AIAG standard, and subjects them to ultrasonic testing to determine the size of any cavities in the axle material. Before we get into the stability and linearity analysis, we will first get to know the useful function for tallying individual variables as part of data management.

We will then apply appropriate hypothesis tests to identify significant anomalies in terms of linearity and stability. We will use a scatter plot to get a visual impression of the trends with regard to linearity and stability, so that we can assess the stability and linearity of the measurement system on the basis of the corresponding quality criteria and the regression equation. Finally, we will optimize the measurement system based on our analysis results, and then reassess whether the implemented measures have improved its stability and linearity.

MAIN TOPICS MINITAB TUTORIAL 21

- Measuring system stability and linearity, fundamentals

- Analysis of the measuring system stability

- Analysis of the measuring system linearity

- "Tally individual variables" function

- Linearity analysis by using the regression equation

- Correction of the systematic measurement deviations
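The linearity analysis by regression equation listed above can be illustrated with a small least-squares fit of bias against reference value. This is a sketch under stated assumptions: the function name `linearity_fit` and all numbers are hypothetical, bias is taken as measured value minus reference value, and the hypothesis tests that a full linearity study would add are left out.

```python
def linearity_fit(reference, measured):
    """Fit bias = intercept + slope * reference by ordinary least squares."""
    if len(reference) != len(measured) or len(reference) < 2:
        raise ValueError("need two equally long lists with at least 2 values")
    bias = [m - r for r, m in zip(reference, measured)]
    n = len(reference)
    mean_ref = sum(reference) / n
    mean_bias = sum(bias) / n
    s_xy = sum((r - mean_ref) * (b - mean_bias) for r, b in zip(reference, bias))
    s_xx = sum((r - mean_ref) ** 2 for r in reference)
    slope = s_xy / s_xx
    intercept = mean_bias - slope * mean_ref
    return slope, intercept


# Hypothetical reference cavity sizes and device readings (same unit).
reference = [2.0, 4.0, 6.0, 8.0, 10.0]
measured = [2.1, 4.1, 6.0, 7.9, 9.9]
slope, intercept = linearity_fit(reference, measured)
print(f"bias = {intercept:.3f} + ({slope:.3f}) * reference")
```

A slope close to zero indicates that the bias stays constant over the measuring range. In this invented data set the device reads slightly high for small reference values and slightly low for large ones, which is the kind of systematic deviation a linearity study is meant to reveal.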

22 ATTRIBUTIVE AGREEMENT ANALYSIS (GOOD PART, BAD PART), PART 1

22 ATTRIBUTIVE AGREEMENT ANALYSIS (GOOD PART, BAD PART)

In the 22nd Minitab tutorial, we accompany the final inspection station of Smartboard Company. Here, the skateboards assembled in the early, late, and night shifts are subjected to a final visual surface inspection before being shipped to the customer, and are declared good or bad parts depending on the amount of surface scratches. Skateboards with a "GOOD" rating are sent to the customer, while skateboards with a "BAD" rating have to be scrapped at great expense. One employee is available for the visual surface inspection in each production shift, so that across three production shifts a total of three different surface appraisers classify the skateboards as "GOOD" or "BAD". Our task in this Minitab tutorial is to check whether all three appraisers have an identical understanding of quality, with regard to repeatability and reproducibility, in their quality assessments. In contrast to the previous training units, we are no longer dealing with continuously scaled quality assessments, but with the attributive quality assessments "good part" and "bad part". Before we get into the required measurement system analysis, we will first get an overview of the three important scale levels: nominal, ordinal, and cardinal. We will also create the measurement protocol layout for our agreement check. We will then use the complete data set to analyze the appraiser matches, and evaluate the corresponding match rates using the Fleiss Kappa statistic and the corresponding Kappa values. We will work through the principle of the Kappa statistic, also known as Cohen’s Kappa statistic, by hand on a simple data set in order to understand how the corresponding results in the output window come about. We will learn how the Kappa statistic helps us to assess, for example, the probability that a match rate achieved by the appraisers could also have occurred at random.

We will first learn to evaluate the agreement rate within the appraisers using Kappa statistics, and then see how the final inspection team also uses Kappa statistics to evaluate the agreement of the appraisers’ assessments with the customer requirement. We will also be able to find out whether an inspector tends to declare actual bad parts as good parts, or vice versa. After the agreement tests against the customer standard, we will examine not only how often the appraisers agreed with each other, but also how well the agreement rate of the appraiser team as a whole can be classified in relation to the customer standard. With these findings, we will be in a position to make appropriate recommendations for action, for example appraiser training to achieve a uniform understanding of quality in line with customer requirements. In this context, we will also become familiar with two very useful graphs: agreement of assessments within the appraisers, and appraisers compared to the standard. We will see that these graphs are very helpful in day-to-day business, for example to get a quick visual impression of the most important results of our attributive agreement analysis.

MAIN TOPICS MINITAB TUTORIAL 22, part 1

- Scale levels, fundamentals

- Nominal, ordinal, and cardinal scale data types

- Discrete versus continuous data

MAIN TOPICS MINITAB TUTORIAL 22, part 2

- Sample size for attributive MSA according to AIAG

- Appraiser agreement rate for attributive data, principle

- Create measurement report layout for appraiser agreement

- Performing the appraiser agreement analysis for attributive data

- Analysis of agreement rate within the appraisers

- Fleiss-Kappa and Cohen-Kappa statistics

MAIN TOPICS MINITAB TUTORIAL 22, part 3

- Analysis of appraiser versus standard compliance

- Assessment of appraiser agreement based on the Fleiss-Kappa statistic

- Kappa statistic for assessing the coincidental match rate

- Analysis of appraiser mismatches

- Graphical MS analysis within the appraisers

- Graphical MS appraiser analysis compared to the customer standard
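The principle behind the Kappa statistic from the lists above can be sketched for the simplest case: Cohen's Kappa for two sets of ratings, for example one appraiser compared with the customer standard. The function name `cohens_kappa` and the ratings are hypothetical, and the Fleiss variant for more than two raters is not covered here.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Cohen's Kappa: observed agreement corrected for chance agreement."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("need two equally long, non-empty rating lists")
    n = len(ratings_a)
    # Share of parts on which both rating series agree.
    p_observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected if both series were assigned independently,
    # based on how often each category was used.
    counts_a = Counter(ratings_a)
    counts_b = Counter(ratings_b)
    p_chance = sum(counts_a[c] * counts_b[c] for c in counts_a) / n**2
    if p_chance == 1.0:
        raise ValueError("Kappa is undefined when only one category is used")
    return (p_observed - p_chance) / (1.0 - p_chance)


# Hypothetical ratings for ten skateboards.
appraiser = ["GOOD", "GOOD", "BAD", "GOOD", "BAD",
             "BAD", "GOOD", "GOOD", "BAD", "GOOD"]
standard = ["GOOD", "GOOD", "BAD", "BAD", "BAD",
            "BAD", "GOOD", "GOOD", "GOOD", "GOOD"]
kappa = cohens_kappa(appraiser, standard)
print(round(kappa, 3))
```

In this invented example the raw match rate is 80 %, but about 52 % agreement would be expected by chance alone, so Kappa comes out noticeably lower than the match rate suggests.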

23 ATTRIBUTIVE AGREEMENT ANALYSIS (MORE THAN 2 ATTRIBUTE LEVELS), PART 1

23 ATTRIBUTIVE AGREEMENT ANALYSIS (MORE THAN 2 ATTRIBUTE LEVELS)

In the 23rd Minitab tutorial, we are in the final assembly department of Smartboard Company. Here, in the early, late, and night shifts, all the individual skateboard components are assembled into a finished skateboard and subjected to a final visual surface inspection before dispatch to the customer. Depending on their visual appearance, the skateboards receive integer quality grades from 1 to 5, without intermediate grades. The scale runs from grade 1 for a damage-free, very good skateboard to grade 5 for a skateboard with very severe surface damage. One surface appraiser is available for visual quality control in each production shift, so that across three production shifts a total of three different surface appraisers rate the skateboards with quality grades 1 to 5. The core of this Minitab tutorial is to check whether all three appraisers have a high level of repeatability in their own assessments, and whether all three appraisers have a sufficiently identical understanding of customer quality. Finally, it is important to check whether the team of appraisers as a whole has the same understanding of quality as the customer. In contrast to the previous training unit, in which only the two answer options "good" and "bad" were possible, in this training unit we are dealing with an appraiser agreement analysis in which five answers are possible, and these answers are ordered relative to each other. For example, a grade of 1 for very good has a completely different qualitative value than a grade of 5 for poor.

Before we get into the attributive agreement analysis, we will first learn how to create a measurement protocol layout when the attribute levels follow an ordered sequence of values. We will then analyze the appraiser matches with the complete data set, and learn how to evaluate the agreement within the appraisers. To assess the appraiser agreement, we will also learn how to use the Fleiss-Kappa statistic, in addition to the classic match rates in percent, in order to derive a statement about the expected future match rate with a correspondingly defined probability of error. We will then get to know the very important Kendall concordance coefficient which, in contrast to the Kappa value, not only states whether there is a match but also reflects the severity of the wrong decisions by taking the relative size of the deviations into account. With this knowledge, we will also be able to assess the agreement of the appraisers’ assessments with the customer standard, and use the corresponding quality criteria to work out how often the appraisers were of the same opinion, i.e., whether the appraisers have the same understanding of quality. In addition to the Kendall concordance coefficient, we will also get to know the Kendall correlation coefficient, which provides additional information about whether, for example, an appraiser tends to make less demanding judgments and therefore undesirably classifies a skateboard that is inadequate from the customer’s point of view as a very good skateboard.

MAIN TOPICS MINITAB TUTORIAL 23, part 1

- Create measurement report layout for ordered value levels

- Agreement analysis within the appraisers by using the Fleiss-Kappa statistic

- Derivation of the Kendall coefficient of concordance

- Agreement analysis within the appraisers by using the Kendall concordance

MAIN TOPICS MINITAB TUTORIAL 23, part 2

- Derivation of the Kendall correlation coefficient

- Appraiser agreement analysis compared to the customer standard

MAIN TOPICS MINITAB TUTORIAL 23, part 3

- Agreement analysis by using the Kendall correlation coefficient

- Graphical evaluation of appraiser repeatability and reproducibility
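The Kendall coefficient of concordance named in the topic lists above can be sketched with the textbook formula, including the correction for tied grades. The function names `average_ranks` and `kendalls_w` and the example grades are hypothetical, and the output of a statistics package may differ in details such as rounding.

```python
from collections import Counter


def average_ranks(values):
    """Rank values from 1 upward; tied values share their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1          # mean of positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kendalls_w(grades_by_appraiser):
    """Kendall's W for m appraisers grading the same n parts."""
    m = len(grades_by_appraiser)
    n = len(grades_by_appraiser[0])
    rank_rows = [average_ranks(row) for row in grades_by_appraiser]
    rank_sums = [sum(row[i] for row in rank_rows) for i in range(n)]
    mean_sum = m * (n + 1) / 2
    s = sum((rs - mean_sum) ** 2 for rs in rank_sums)
    # Tie correction: sum of (t^3 - t) over every group of t equal grades.
    ties = sum(t**3 - t for row in grades_by_appraiser
               for t in Counter(row).values())
    return 12 * s / (m**2 * (n**3 - n) - m * ties)


# Hypothetical grades (1 = very good, 5 = severe damage) for five boards.
grades = [
    [1, 2, 3, 4, 5],   # early shift appraiser
    [1, 3, 2, 4, 5],   # late shift appraiser
    [2, 1, 3, 5, 4],   # night shift appraiser
]
w = kendalls_w(grades)
print(round(w, 3))
```

W ranges from 0 (no agreement) to 1 (identical ordering by all appraisers). Because it works on ranks, a disagreement between neighboring grades lowers W less than a disagreement between grade 1 and grade 5, which is the property that distinguishes it from the Kappa value.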
